A common question we hear is “Can parameters be safely passed in URLs to secure web sites?” The question often arises after a customer has looked at an HTTPS request in HttpWatch and wondered who else can see this data.

For example, let’s pretend to pass a password in a query string parameter using the following secure URL:

https://www.httpwatch.com/?password=mypassword

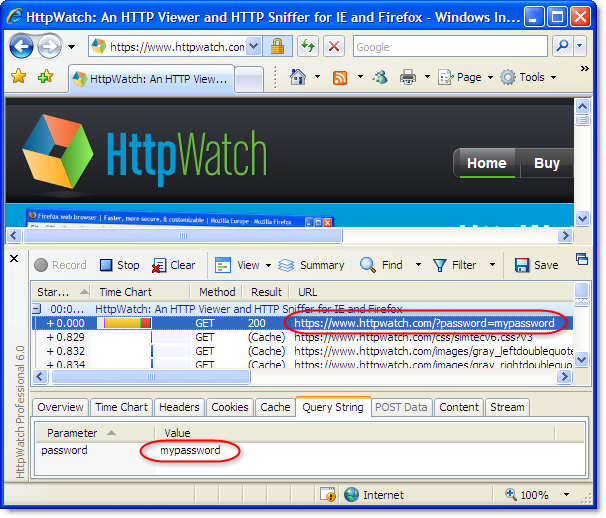

HttpWatch is able to show the contents of a secure request because it is integrated with the browser and can view the data before it is encrypted by the SSL connection used for HTTPS requests:

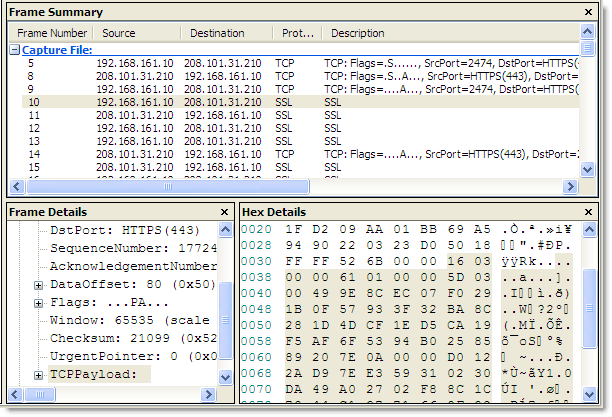

If you look at the same request in a network sniffer, like Network Monitor, you would just see the encrypted data going backwards and forwards. No URLs, headers or content are visible in the packet trace.

You can rely on an HTTPS request being secure so long as:

- No SSL certificate warnings were ignored

- The private key used by the web server to initiate the SSL connection is not available outside of the web server itself.
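For clients other than a browser, the first condition corresponds to leaving certificate verification switched on. Here is a minimal Python sketch using the standard `ssl` module; the helper name `strict_tls_context` is our own invention for illustration:

```python
import ssl


def strict_tls_context():
    """Return a TLS context that rejects invalid or mismatched certificates."""
    context = ssl.create_default_context()
    # These are already the defaults for create_default_context(); they are
    # set explicitly here to show that nothing has been relaxed.
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    return context
```

Passing a context like this to an HTTPS client means a bad certificate causes the connection to fail rather than being silently accepted.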

So at the network level, URL parameters are secure, but there are some other ways in which URL based data can leak:

- URLs are stored in web server logs - typically the whole URL of each request is stored in a server log. This means that any sensitive data in the URL (e.g. a password) is being saved in clear text on the server. Here’s the entry that was stored in the httpwatch.com server log when a query string was used to send a password over HTTPS:

2009-02-20 10:18:27 W3SVC4326 WWW 208.101.31.210 GET /Default.htm password=mypassword 443 ...

It’s generally agreed that storing clear text passwords is never a good idea, even on the server.

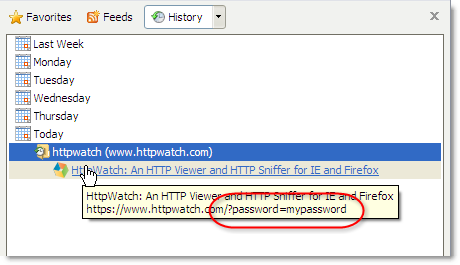

- URLs are stored in the browser history - browsers save URL parameters in their history even if the secure pages themselves are not cached. Here’s the IE history displaying the URL parameter:

Query string parameters will also be stored if the user creates a bookmark.

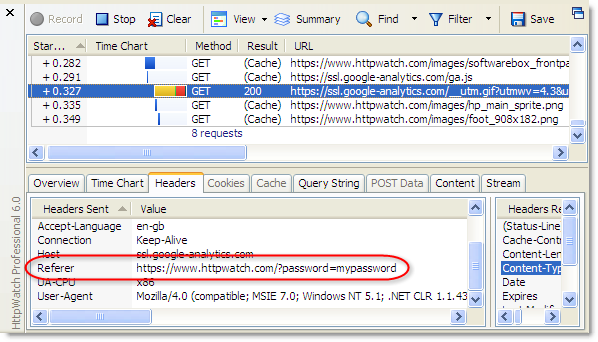

- URLs are passed in Referer headers - if a secure page uses resources, such as JavaScript, images or analytics services, the URL is passed in the Referer request header of each embedded request. Sometimes the query string parameters may be delivered to and stored by third party sites. In HttpWatch you can see that our password query string parameter is being sent across to Google Analytics.
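If you cannot avoid sensitive query string parameters straight away, one stopgap is to mask them before a URL is written to your own application logs. The Python sketch below shows the idea. The function name and the list of parameter names are assumptions made for illustration, and it does nothing about the web server's own log, the browser history or the Referer header:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameter names; adjust to whatever your application uses.
SENSITIVE_PARAMS = {"password", "token", "cardnumber"}


def redact_url(url, sensitive=SENSITIVE_PARAMS, mask="REDACTED"):
    """Return the URL with the values of sensitive query parameters masked."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    cleaned = [(name, mask if name.lower() in sensitive else value)
               for name, value in pairs]
    return urlunsplit(parts._replace(query=urlencode(cleaned)))


print(redact_url("https://www.httpwatch.com/?password=mypassword"))
```

Parameters that are not in the sensitive set, such as a part number, pass through unchanged.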

Conclusion

The solution to this problem requires two steps:

- Only pass around sensitive data if absolutely necessary. Once a user is authenticated it is best to identify them with a session ID that has a limited lifetime.

- Use non-persistent, session level cookies to hold session IDs and other private data.
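As a rough illustration of the first step, here is a small in-memory Python sketch of session IDs with a limited lifetime. The class name, the 20 minute default and the injectable clock are all assumptions made for the example; a real site would normally rely on the session support built into its web framework:

```python
import secrets
import time


class SessionStore:
    """Maps random session IDs to users and forgets them after a set time."""

    def __init__(self, lifetime_secs=1200, clock=time.monotonic):
        self._lifetime = lifetime_secs
        self._clock = clock
        self._sessions = {}  # session ID -> (user, expiry time)

    def create(self, user):
        """Issue a new unguessable session ID for an authenticated user."""
        session_id = secrets.token_urlsafe(32)
        self._sessions[session_id] = (user, self._clock() + self._lifetime)
        return session_id

    def lookup(self, session_id):
        """Return the user for a live session, or None if unknown or expired."""
        entry = self._sessions.get(session_id)
        if entry is None:
            return None
        user, expires_at = entry
        if self._clock() >= expires_at:
            del self._sessions[session_id]
            return None
        return user
```

The password is only needed once, at login; every later request carries just the session ID.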

The advantage of using session level cookies to carry this information is that:

- They are not stored in the browser’s history or on the disk

- They are usually not stored in server logs

- They are not passed to embedded resources such as images or JavaScript libraries

- They only apply to the domain and path for which they were issued
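To make these properties concrete, the following Python sketch builds a Set-Cookie value for a session level cookie with the standard `http.cookies` module. The cookie name `sessionid` and the helper function are hypothetical, and the Secure and HttpOnly flags are our additions rather than something discussed above. The important detail is that no Expires or Max-Age attribute is set, so the browser discards the cookie when the session ends:

```python
import secrets
from http.cookies import SimpleCookie


def session_cookie_header(domain, path="/", session_id=None):
    """Build a Set-Cookie value for a non-persistent session cookie."""
    if session_id is None:
        session_id = secrets.token_urlsafe(32)
    cookie = SimpleCookie()
    cookie["sessionid"] = session_id  # hypothetical cookie name
    morsel = cookie["sessionid"]
    morsel["domain"] = domain   # only sent back to this domain
    morsel["path"] = path       # ...and only for this path
    morsel["secure"] = True     # only sent over HTTPS
    morsel["httponly"] = True   # not readable from page scripts
    # No "expires" or "max-age": the cookie lasts for the browser session only.
    return morsel.OutputString()


print("Set-Cookie:", session_cookie_header("store.httpwatch.com"))
```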

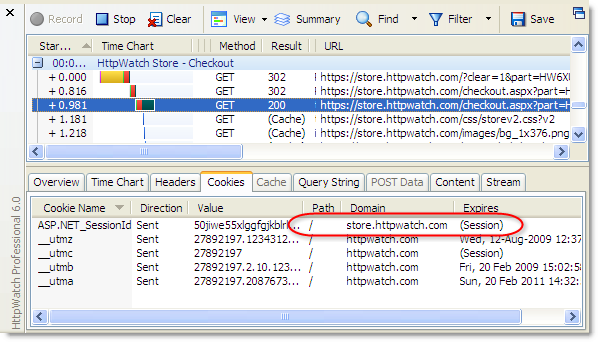

Here’s an example of the ASP.NET session cookie that is used in our online store to identify a user:

Notice that the cookie is limited to the domain store.httpwatch.com and it expires at the end of the browser session (i.e. it is not stored to disk).

You can of course use query string parameters with HTTPS, but don’t use them for anything that could present a security problem. For example, you could safely use them to identify part numbers or types of display like ‘accountview’ or ‘printpage’, but don’t use them for passwords, credit card numbers or other pieces of information that should not be publicly available.

The Firefox Process Model

One of the interesting new features in Google’s Chrome browser is the use of one Windows process per site or tab. This helps to enforce the isolation between tabs and prevents a problem in one tab crashing the whole browser.

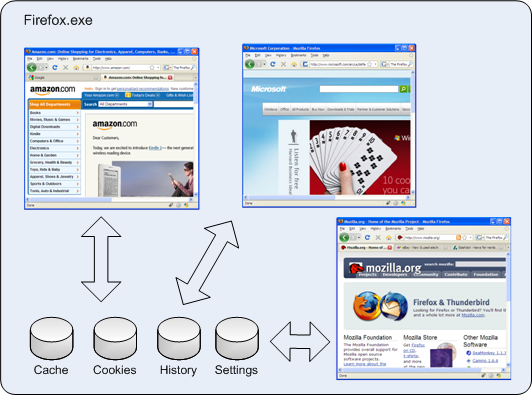

In comparison, Firefox seems to have a simplistic process model on Windows. It doesn’t matter how many tabs or windows you open, or how many times you start Firefox - by default you get one instance of firefox.exe:

In Internet Explorer you can create a separate instance of the browser process just by starting another copy of iexplore.exe.

There are advantages to Firefox’s single process model:

- It uses fewer system resources per tab compared to creating multiple Windows processes.

- Firefox can use fast in-process data access and synchronization objects when it interacts with the history, cookie and cache data stores.

However, the lack of isolation means that if anything causes a page to crash, you’ll lose all your Firefox tabs and windows. This is mitigated to some degree by Firefox’s ability to restart the browser and reload the set of pages displayed in the previous session.

So what do you do if you are developing an add-on for Firefox or you want to run automated tests in Firefox whilst still using Firefox to browse in the normal way?

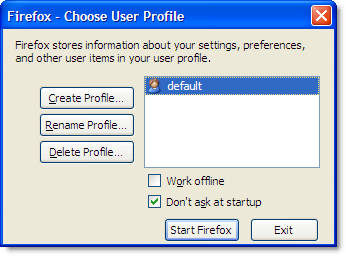

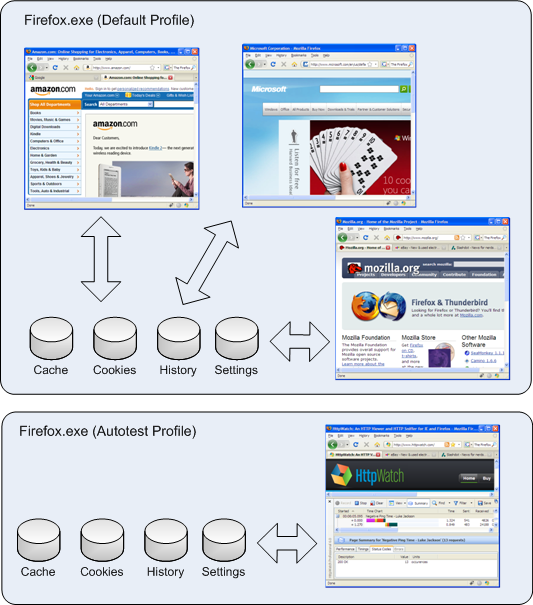

In Firefox, multi-process support is provided through the use of profiles. When Firefox is installed, you automatically get one default profile that contains user settings, browsing history, the browser cache and persistent cookies. Additional profiles can be created using the Firefox Profile Manager.

The Profile Manager is built into Firefox and is started by running this command in Start->Run:

firefox -P -no-remote

The -P flag indicates that the Profile Manager should be started and the -no-remote flag indicates that any running instances of Firefox should be ignored. If you run without this flag and you have already started Firefox, the command will simply open a new Firefox window without displaying the Profile Manager.

The Profile Manager has a simple user interface that allows you to create, delete and rename profiles:

You can start Firefox in a non-default profile by using the following command line:

firefox -P "profile name" -no-remote

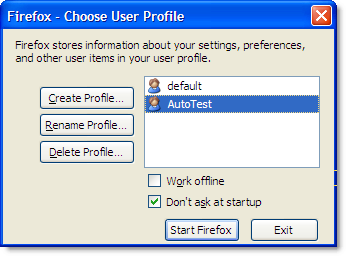

For example, if you created a new profile called AutoTest:

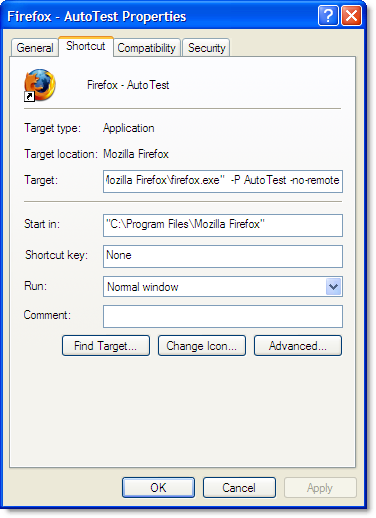

You could set up a shortcut like this to start Firefox in the AutoTest profile:

Each profile uses its own copy of the firefox.exe process, as well as its own settings, browser cache, history and persistent cookies. This provides more isolation than you would achieve by running multiple processes in IE. You can even separately enable or disable add-ons like Firebug or HttpWatch in each profile.
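If you start test runs from a script rather than a shortcut, the same command line can be built in code. This is a minimal Python sketch that assumes `firefox` is on the PATH; the helper names are our own:

```python
import subprocess


def firefox_command(profile_name, executable="firefox"):
    """Build the argument list that starts Firefox in the named profile."""
    # -P selects the profile; -no-remote stops the command from being
    # handed over to an already running Firefox instance.
    return [executable, "-P", profile_name, "-no-remote"]


def launch_firefox(profile_name, executable="firefox"):
    """Start Firefox in its own process and return the Popen handle."""
    return subprocess.Popen(firefox_command(profile_name, executable))


print(firefox_command("AutoTest"))
```

Calling `launch_firefox("AutoTest")` would then start a separate firefox.exe process for the AutoTest profile, leaving your default profile free for normal browsing.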

Internet Explorer’s cache and persistent cookies are maintained on a per user basis making it difficult to run separate instances with their own storage and settings. With Firefox you simply use different profiles. For example, you could use your default profile for normal browsing and have a separate profile to use for another purpose such as automated testing:

The HttpWatch automation interface in version 6.0 supports the use of profiles with Firefox. The profile name can be passed to the Attach and New methods of the Firefox plugin object. Passing an empty string indicates that you want to use the default profile.

Here’s a modified version of the page load test that we previously featured. It’s written in C# and uses a non-default profile to run the test:

    // Set a reference to the HttpWatch COM library
    // to start using the HttpWatch namespace
    using HttpWatch;

    namespace EmptyCacheTest
    {
        class Program
        {
            static void Main(string[] args)
            {
                string url = "http://www.httpwatch.com";
                string profileName = "AutoTest";

                Controller controller = new Controller();

                // Create an instance of Firefox in the specified profile
                Plugin plugin = controller.Firefox.New(profileName);

                // Clear out all existing cache entries
                plugin.ClearCache();

                plugin.Record();
                plugin.GotoURL(url);

                // Wait for the page to download
                controller.Wait(plugin, -1);

                plugin.Stop();

                // Find the load time for the first page recorded
                double pageLoadTimeSecs =
                    plugin.Log.Pages[0].Entries.Summary.Time;

                System.Console.WriteLine("The empty cache load time for '" +
                    url + "' was " + pageLoadTimeSecs.ToString() + " secs");

                // Uncomment the next line to save the results
                // plugin.Log.Save(@"c:\temp\emptytestcache.hwl");

                plugin.CloseBrowser();
            }
        }
    }

HttpWatch Featured in ‘High Performance Web Sites’ Course at Stanford

Steve Souders, the web performance evangelist at Google, taught a course last year at Stanford’s Computer Science Department based on his highly regarded ‘High Performance Web Sites’ book. The course materials including slides, notes and labs are available from Steve’s web site.

A video of the first lecture of the course has also been released.

In the first few minutes, Steve uses HttpWatch running in Firefox to compare the performance of competing pairs of web sites including MySpace and Facebook, MSN and AOL, and the Google and Yahoo search engines.

HTTPS Performance Tuning

An often overlooked aspect of web performance tuning is the effect of using the HTTPS protocol to create a secure web site. As applications move from the desktop onto the web, the need for security and privacy means that HTTPS is now heavily used by web sites that need to be responsive enough for everyday use.

The tips shown below may help you to avoid some of the common performance and development problems encountered on sites using HTTPS:

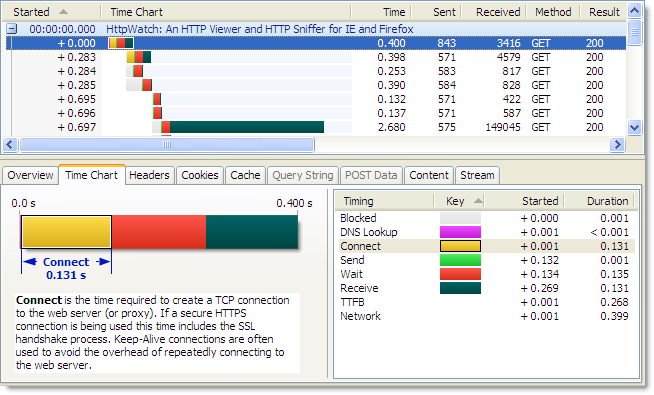

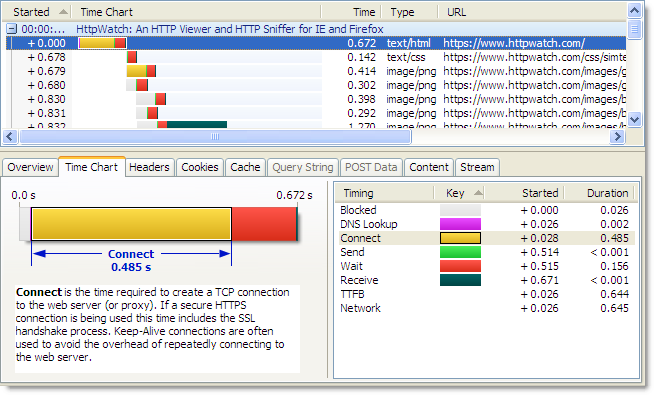

Tip #1: Use Keep-Alive Connections

Whenever a browser accesses a web site it must create one or more TCP connections. That can be a lengthy operation even with normal unsecured HTTP. The use of Keep-Alive connections reduces this overhead by reusing TCP connections for multiple HTTP requests. The screen shot below from HttpWatch shows the TCP connection time over HTTP is approximately 130 milliseconds for our web site when it is accessed from the UK:

Using HTTPS, the lack of Keep-Alive connections can lead to an even larger degradation in performance because an SSL connection also has to be set up once the TCP connection has been made. This requires several roundtrips between the browser and web server to allow them to agree on an encryption key (often referred to as the SSL session key). The corresponding connection time to the same server using HTTPS is nearly four times longer as it includes the HTTPS overhead:

If an HTTPS connection is re-used, the overhead of both the TCP connection and the SSL handshake is avoided.

Some web browsers and servers now allow the re-use of these SSL session keys across connections, but you may not always have control over the web server configuration or the type of browser used.
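The same principle applies to scripts and test tools that make HTTPS requests. As a sketch, the Python function below sends several requests over a single `http.client.HTTPSConnection`, so the TCP connect and SSL handshake happen once rather than per request. The function name and the optional `conn` parameter are assumptions made for the example, and whether the connection really stays open also depends on the server allowing Keep-Alive:

```python
import http.client


def fetch_all(host, paths, conn=None):
    """Fetch several paths over one HTTPS connection; return the status codes."""
    if conn is None:
        conn = http.client.HTTPSConnection(host, timeout=10)
    statuses = []
    try:
        for path in paths:
            conn.request("GET", path, headers={"Connection": "keep-alive"})
            response = conn.getresponse()
            # The body must be read in full before the connection can be
            # reused for the next request.
            response.read()
            statuses.append(response.status)
    finally:
        conn.close()
    return statuses
```

Creating a new connection object for each path instead would repeat the TCP and SSL setup every time.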

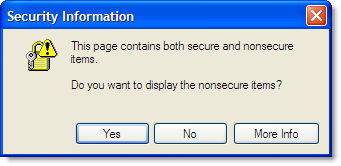

Tip #2: Avoid Mixed Content Warnings

In a previous blog post we talked about the confusing and annoying dialog that is displayed by default if a secure page uses any HTTP resources:

To stop this warning dialog interrupting the page download you need to ensure that everything on the page is accessed over HTTPS. It doesn’t have to be from the same site but it must use HTTPS. For example, the addition of HTTPS support allows the Google Ajax libraries to be safely loaded from secure pages.
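One way to catch stray HTTP resources before your users do is to scan the page markup for them. The following Python sketch uses the standard `html.parser` module. The class and function names are hypothetical, and the list of tags is only a starting point: it will not spot resources loaded from CSS or by script, and it may flag `link` elements that are not actually downloaded.

```python
from html.parser import HTMLParser


class InsecureResourceFinder(HTMLParser):
    """Collects embedded resources that are referenced over plain HTTP."""

    # (tag, attribute) pairs that normally cause the browser to fetch a URL.
    RESOURCE_ATTRS = {("script", "src"), ("img", "src"),
                      ("link", "href"), ("iframe", "src")}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if ((tag, name) in self.RESOURCE_ATTRS and value
                    and value.lower().startswith("http://")):
                self.insecure.append((tag, value))


def find_insecure_resources(html):
    """Return a list of (tag, url) pairs for resources loaded over HTTP."""
    finder = InsecureResourceFinder()
    finder.feed(html)
    return finder.insecure
```

Ordinary links in `a` elements are ignored because following a link does not embed content in the secure page.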

Tip #3: Use Persistent Caching For Static Content

If you follow Tip #2 then everything that your page needs, including images, CSS and JavaScript, will be accessed over HTTPS. You would normally want to persistently cache static content like this for as long as possible to reduce load on the web site and improve performance when a user revisits your site.

Of course, you wouldn’t want to cache anything on the disk that was specific to the user (e.g. HTML page with account details or a pie chart of their monthly spending). On most pages though, nearly all of the non-HTML content can be safely stored, shared and re-used.

There seems to be some confusion over whether caching is possible with HTTPS. For example, Google say this about Gmail over HTTPS:

You may find that Gmail is considerably slower over the HTTPS connection, because browsers do not cache these pages and must reload the code that makes Gmail work each time you change screens.

Although 37Signals acknowledge that in-memory caching is possible, they say that persistent caching is not possible:

The problem is that browsers don’t like caching SSL content. So when you have an image or a style sheet on SSL, it’ll generally only be kept in memory and may even be scrubbed from there if the user is low on RAM (though you can kinda get around that).

Even when you do your best to limit the number of style sheets and javascript files and gzip them for delivery, it’s still mighty inefficient and slow to serve them over SSL every single time the user comes back to your site. Even when nothing changed. HTTP caching was supposed to help you with that, but over SSL it’s almost all for naught.

In reality, persistent caching is possible with HTTPS using both IE and Firefox.

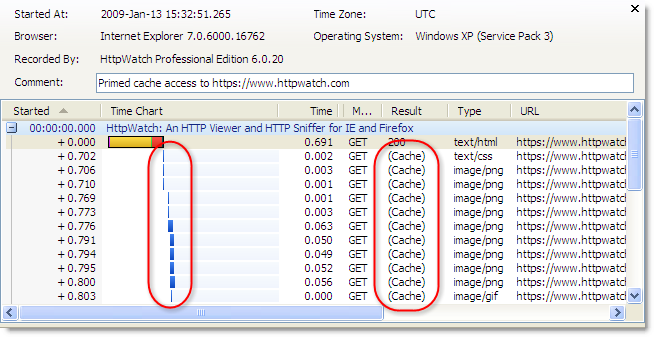

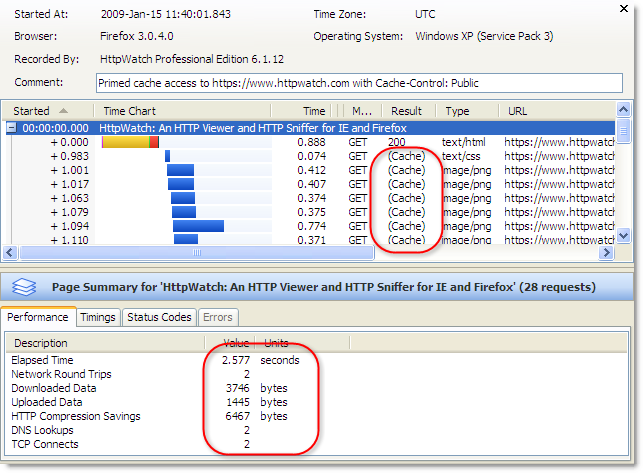

Using HttpWatch you can see if content is loaded from the cache by looking for (Cache) in the Result column or by looking for the blue Cache Read block in the time chart. Here is an example of visiting https://www.httpwatch.com with a primed cache in IE. You can see that all the static resources are reloaded directly from the cache without a round trip to the web server:

If you try this in Firefox 3.0 without adjusting your response headers you will see this instead:

The ‘200’ values in the Result column indicate that the static content is being reloaded even though the site was previously visited and a valid Expires setting was used. Unless you specify otherwise, Firefox will put HTTPS resources into the in-memory cache so that they can only be re-used within a browser session. When Firefox closes, the contents of the in-memory cache are lost.

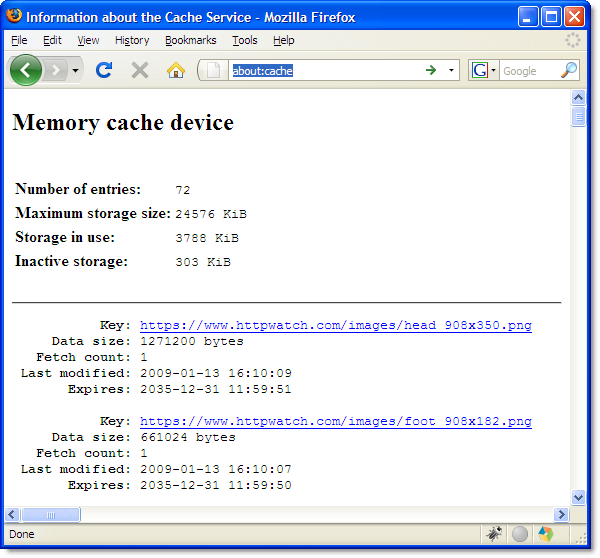

The about:cache page in Firefox confirms that these files are stored using the in-memory cache:

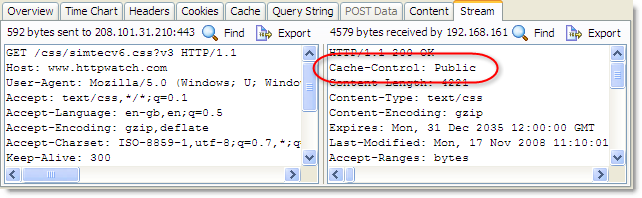

To allow persistent caching of secure content in Firefox you need to set the Cache-Control response header to Public:

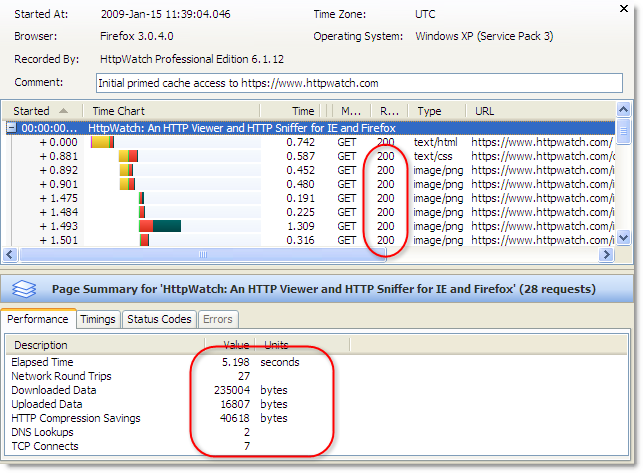

This value moves HTTPS based content into the persistent Firefox disk cache and in the case of https://www.httpwatch.com it more than halves the page load time due to the decrease in network round trips and TCP/SSL connections:

The Public cache setting is normally used to indicate that the content is not per user and can be safely stored in shared caches such as HTTP proxies. With HTTPS this is meaningless as proxies are unable to see the contents of HTTPS requests. So Firefox cleverly uses this header to control whether the content is stored persistently to the disk.

This feature was only added in Firefox version 3.0 so it won’t work with older versions. Fortunately, the take-up of version 3.0 is reported to be much faster than the move from IE 6 to IE 7.
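How you set these headers depends on your web server, but the values themselves are simple. As an illustration, this Python sketch builds the two response headers discussed in this tip for a piece of static content. The function name, the one year default and the `now` parameter are assumptions for the example:

```python
import time
from email.utils import formatdate


def static_content_headers(lifetime_days=365, now=None):
    """Return caching headers for static content served over HTTPS."""
    if now is None:
        now = time.time()
    # Expires must be an HTTP date in GMT.
    expires = formatdate(now + lifetime_days * 86400, usegmt=True)
    return {
        "Cache-Control": "public",  # lets Firefox 3.0 use its disk cache
        "Expires": expires,
    }


for name, value in static_content_headers().items():
    print(name + ": " + value)
```

Only use headers like these on content that is the same for every user, as described above.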

Tip #4: Use an HTTPS Aware Sniffer

A network sniffer is an invaluable tool for optimizing and debugging any client server application. But if you use a network level tool like Netmon or WireShark you cannot view HTTPS requests without access to the private key that the web site uses to encrypt SSL connections.

Often organizations will not allow the use of private keys outside of a production environment, and even if you have administrative access to your web site, getting the private key and using it to decrypt network traffic is not an easy task.

HttpWatch was originally born out of the frustration of trying to debug secure sites. Viewing HTTPS traffic in HttpWatch is easy as it integrates directly with IE or Firefox, and therefore has access to the unencrypted version of the data that is transmitted over HTTPS.

The free Basic Edition shows high level data and performance time charts for any site, and lower level data for a number of well-known sites including Amazon.com, eBay.com, Google.com and HttpWatch.com. For example, you can try it with HTTPS by going to https://www.httpwatch.com.

EDIT: This post was updated to show that persistent caching of HTTPS based content is only available in Firefox version 3.0 onwards.

HttpWatch is a Jolt Finalist!

We are pleased to announce that HttpWatch is a finalist in the Utilities category of the 19th Jolt Product Excellence Awards.

The Jolt Product Excellence Awards are run by CMP Technology’s Dr. Dobb’s. For the past 18 years, the Jolt awards have been presented annually to showcase products that have “jolted” the industry with their significance and made the task of creating software faster, easier, and more efficient.

The final winner in each category will be announced March 11, 2009 at the SD West conference in Santa Clara, CA.
